Cryptography at Crosshairs II: Differential Power Analysis. Differential analysis Differential studies

Ph.D., psychotherapist Goncharov M.A.

Despite the achievements of modern psychotherapy, the rehabilitation of people who have attempted suicide remains a serious problem. There are many theories of suicidal behavior, but none fully explains this phenomenon.

Fortunately, the time when suicide was treated as a purely clinical phenomenon has long passed. There are as many approaches to therapy as there are theories. Of course, our attitude to the problem of suicide is largely determined by our vision of man and our theoretical orientation.

Suicidal behavior is unique to humans. No other living creature chooses death as a solution to a problem.

According to S. Freud, “everything living strives for death, for primary inorganic existence; suicide is a psychological act, the driving force of which is the death instinct.”

A. Adler claims that “the desire for death is a defensive reaction in the form of more or less conscious revenge on oneself or another person. Through suicide, a person overcomes childhood inferiority complexes and asserts himself.”

Stekel viewed “suicide as the result of self-punishment in the event that the subject had a culturally suppressed desire to kill another person.”

K. Menninger: “Suicide as a manifestation of complexes of sadism and masochism, a way of punishing the Ego by the Super-Ego.”

G.I. Gordon saw suicide as “a weakening or complete disappearance of the vital tone or instinct of life.”

Sociologist William Irwin Thompson: “People are not objects like tables and chairs, and if they find their lives reduced to the mere existence of tables and chairs, they commit suicide.”

According to Ringel (1978), the suicide attempt is “the completion of a painful development.”

For Amery (1979), the act of suicide is evidence of human freedom, which distinguishes man from animals. According to Battegay (1981), on the contrary, when committing suicide, there can be no talk of any free decision.

According to the concept of A.G. Ambrumova, suicidal behavior is a consequence of socio-psychological maladaptation in the context of an experienced microsocial conflict and is one of the types of general behavioral reactions of a person in extreme situations, as it occurs across the entire range of diagnostic variations - from mental norms to severe pathology.

Everyone wants to go to heaven, but no one wants to die.

Suicidal behavior itself is rarely a desire to end up in the grave. A suicide attempt means less a desire for death than a challenge to the world around us. Berdyaev said that a person never denies life itself, he denies that moment of life that makes this life unbearable. Consequently, suicidal behavior itself is not a cause, but a consequence, i.e. symptom. The reason is a conflict that is not always on the surface.

Positive psychotherapy, or differential analysis, is a conflict-centered method of psychotherapy with a humanistic image of the person; the work is therefore focused specifically on finding the conflict “that makes life unbearable.” It makes little sense to treat suicidal behavior at the level of the symptom. That is about the same as repairing a car by painting over its scratches. The transition from symptom to conflict is the main step in psychotherapy in general and in working with suicidal behavior in particular. The method of positive psychotherapy takes its name from the Latin word “positum”, which means factual, given. Suicidal behavior is not the only thing that is factual and given. The point of this approach is that a disorder is not a monster alien to the person with a life of its own, but a dynamic state of the person, a reaction to environmental influences. In positive psychotherapy, the focus is not so much on the disease, symptom or problem as on those characteristics (actual abilities) of the individual whose conflict caused the dysfunction, and on those that will help the person cope with the situation.

Positive psychotherapy takes a symptom as a response to changes in the external environment or internal experiences available to a given person in a given situation. By understanding the abilities that lead to this response and how they originate in development, we can change these abilities through education and training. As a result, the reaction changes and the symptom disappears.

Differential analysis in PPT comes down to several main points:

1. Positive interpretation of the problem

2. Determining the scope of the conflict

3. Determination of the content of the conflict (microtrauma theory)

4. Definition of the basic conflict

5. Verbalization

6. Moving beyond neurotic repetition (stereotypes)

7. Development of self-help skills in problem solving

One of the important tools of positive psychotherapy is the positive interpretation of symptoms. This does not mean putting on rose-colored glasses, or denying or discounting the severity of symptoms. It is an opportunity to consider what specific aspirations, needs or abilities lie behind the symptom. Positive reinterpretation also allows us to outline paths of personal development determined by other positive abilities, thus shifting search activity from painful to adaptive ways of communicating with the world and with oneself. For example:

- Depression is the ability to react extremely emotionally to conflicts.

- Fear of loneliness is the need to communicate with other people.

If you think about what it means to “take your own life,” you can find some positive aspects. If a person “takes his life,” it means that he comprehends life, masters it, adapts it to himself. A new beginning is possible here. “To take one’s own life” means to question one’s life and change one’s views on it (N. Pezeshkian). In addition, it could be:

- The ability to end an unbearable situation

- Ability to get rid of pain

- The ability to overcome fear

- The ability to master life

- The ability not to be a slave to the situation

- Ability to connect with the dead

- Ability to attract attention

- Ability to isolate

- Question life

- Change your location

We focus on the positive aspects of suicidal behavior before addressing the negative ones. Attempts by a psychotherapist to follow a standard emergency intervention procedure without a reasonable diagnosis can be antitherapeutic and even dangerous, since it may lead such a patient to believe that in order to be heard, one must demonstrate, rather than simply talk about, suicide. Such cases also leave the therapist with a feeling of hatred toward the patient because the patient appears to be asking for help and then rejecting sincere attempts to help him (Frank et al., 1952).

According to Ambrumova, there are several main areas of conflict:

1. Family-personal

2. Mental health status

3. State of physical health

4. Conflicts in the professional sphere

5. Conflicts related to antisocial behavior

6. Material and everyday difficulties

These spheres can be mapped onto the balance model of N. Pezeshkian. It then becomes clear that the sphere of spirituality and existential questions is not taken into account; it is probably attributed to the state of mental health. N. Pezeshkian's model shows imbalance across four areas of life: which areas are given special importance and which remain in the shadows.

But, despite clarification of the conflict area, the content of the conflict remains hidden.

First, the area of conflict is identified, and then the preferred way of responding to the conflict is explored. A long-term imbalance will inevitably lead to certain violations. There are two main reasons leading to an imbalance with “flight into fantasy”:

1. The areas are simply undeveloped (lack of experience)

2. They have accumulated too many conflicts (negative experiences).

This can be seen in the following example:

Two patients can be clinically depressed and at the same time have almost the same vegetative manifestations: insomnia, loss of appetite, tearfulness, psychomotor retardation, and so on. But they differ radically in their subjective experiences. One feels bad in the sense of his moral imperfection. He contemplates suicide because he believes that his existence only exacerbates the problems of the world, and he will only be doing the planet a favor by ridding it of his evil influence. The other feels not so much immoral as internally empty, defective, ugly. He also thinks about suicide, but not in order to improve the world - he does not see the meaning in this life. The first experiences a burning feeling of guilt, the second - all-encompassing shame (Blatt, 1974). In the language of object relations theory, the former is filled with internalized objects telling him that he is bad; the second is devoid of internalized objects that could guide him.

The diagnostic distinction between the first type of depression and the second is very important for practical reasons. The first type of depressed client will not respond to the therapist's overtly sympathetic, encouraging tone; he will believe that he was taken for a person more worthy than he really is, and will be even more depressed (ironic depression). The second type of depressed person will experience a great sense of relief when there is an open expression of support and understanding; his emptiness will be temporarily filled and the agony of his shame will be softened.

Differential analysis. Identification of “actual abilities”, i.e. qualities, properties that describe the constant characteristics of human behavior, allows us to consider any conflict as the result of a collision not of personalities, but of individual characteristics of behavior that are not absolute and fixed, but can change and develop. Thus, the area of conflict is localized, its content is determined, the tension and fatality of the situation are removed, and the path to change turns out to be obvious and realistic. The development of the ability to distinguish, explore and change actual abilities constitutes the main therapeutic power of differential analysis.

There are two main types of actual abilities:

PRIMARY abilities develop from the basic ability to love. They arise from the first day a person is born due to contact with loved ones. Primary abilities are not more important than secondary ones or vice versa. They are the basic phenomenon, the foundation, the emotional content on which secondary abilities are built. Primary abilities describe the life emotional experience that is acquired in connection with the implementation of secondary abilities.

SECONDARY abilities are acquired through the assimilation of communicated knowledge. They reflect the norms of behavior of a social group that stimulates or suppresses (with the help of primary abilities, or, more precisely, the satisfaction of primary needs) certain actions.

Actual abilities characterize the most important parameters in the formation of a person’s character, the content and motives of human behavior, interpersonal interaction, the genesis of conflict, and therapy. Depending on the conditions, abilities develop unevenly and differently in different people. Some of them may be developed to the point of virtuosity, while others remain in their infancy. You can, for example, love order but be impatient.

Inconsistency between the expected (“correct”) and the actually existing (“positive”) development of one’s own or others’ actual abilities can cause micro- and macrotraumas, conflicts, problems and disputes and, as a result, conditions such as anxiety, fear, sleep disorders, aggression, etc. Suicidal disorders can be based both on constantly repeating and mutually reinforcing microtraumas, arising from collisions over differences in particular actual abilities, and on macrotraumas. Reviewing ten events from the past five years can help determine the nature of the trauma.

Actual abilities are also examined in relation to specific symptoms. In principle, any actual ability can be affected, although only if it has already acquired the character of a symptom and has thereby received a negative assessment.

One of the diagnostic tasks is patient identification. The suicide may be a so-called “symptom carrier”, but the participants in the conflict have not been identified.

If the four domains of conflict processing are correlated with cognition, i.e. with those areas through which we enter into relationships with reality, then the dimension of relationships is determined by the ability to love, which also develops in interaction with the world around us. The nature of the relationship opens up access to opportunities for emotional expression. In positive psychotherapy, it is believed that in a social context the basic personality abilities (love and cognition) develop under the influence of four formative factors of the role model (Fig. 2):

Following the client-centered approach of C. Rogers (Rogers C.R., 1951), positive psychotherapy postulates that human development occurs under the influence of a basic need for a positive evaluation of a significant environment (love). Satisfaction or frustration of this need over time begins to be perceived as an internal, personal experience (cognition), separated from the social environment, i.e. like self-esteem. Various spontaneous manifestations are encouraged or suppressed by others to varying degrees. In order to maintain a positive assessment, a person adjusts and falsifies his experiences. Thus, significant others, their relationships and attitudes become a role model. These primary concepts of primary socialization constitute the defining basis of personality, which can be supplemented by new experiences. It is the ability to supplement primary concepts with new experience that allows us to learn, change and improve.

“I” - If the natural manifestations of personality are predominantly frustrated by the significant environment, an attitude is formed towards oneself as incapable (ability to know) and as unloved and of no value to others (ability to love): a lack of basic trust in the sense of Erikson (1950).

“You” - The result of frustration of the capacity for love is rejection, and the capacity for cognition is the inability to set boundaries at all or the establishment of too strict boundaries (as overcompensation).

“We” - the result of conflict in this concept is a feeling of dependence on others or a socially phobic reaction.

“Prime-We” - The result of the conflict in this concept is the lack of meaning, lack of one’s own worldview, the need for constant leadership, and dependence on short-term goals.

The problem of repeated suicidal actions remains one of the most pressing problems in modern psychiatry. In this regard, an important stage of therapy is the development of self-help skills. The five-step strategy is an opportunity to stop running in place and develop a focused investment of energy in solving problems.

1. Observation/distancing.

2. Inventory.

3. Situational encouragement.

4. Verbalization.

5. Expansion of the goal system.

The goal of the fifth and final stage of therapy is to develop the ability to invest energy not only in problems, but also in other areas of life. The patient's readiness to act independently is discussed. Four areas of conflict processing are best used as a guide for expanding goals. Any live interaction with a partner who has a different concept contains a transcultural approach and the potential for expanding goals: What would you do if you no longer had problems? What are you dreaming about? and so on.

Conclusions:

- Conflict is more often in the sphere of contacts

- Prevalence of microtraumas over macrotraumas

- Individualistic approach to problem solving prevails

- Long-term waste of energy on solving a problem without a clear goal

- “Escape into fantasy” is the most preferred way of processing conflict

- Lack of conscious attitude towards issues of meaning (Prime-We)

- Deficit of differentiation

- Lack of positive experience in solving problems

DTA is based on recording the changes in the temperature of a sample of the material under study as it is heated or cooled. In thermal analysis (TA), the sample is placed in a special crucible, which is then placed in an electric furnace. The crucible with the sample is then heated smoothly at a fixed heating rate, and the sample temperature is recorded at set time intervals. The measurements are used to plot the temperature of the sample against heating time.

T is temperature and t is time, so T = f(t).

This plot is called the temperature curve, or heating curve. If, during heating, some transformation (for example, a chemical reaction) that absorbs or releases heat occurs in the substance under study, then T = f(t) deviates more or less noticeably from a straight line.
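As a rough illustration of how such a deviation could be located in recorded data, the following Python sketch fits a straight line to the first few points of a heating curve and flags the points that depart from it. The function name `find_deviation`, the tolerance, and the sample data are all assumptions made for this example; they do not come from any instrument or from the notes above.

```python
def find_deviation(times, temps, n_baseline=5, tol=1.0):
    """Return indices where T departs from the initial straight line by more than tol.

    Assumes the first n_baseline points are free of thermal effects.
    """
    ts = times[:n_baseline]
    Ts = temps[:n_baseline]
    t_mean = sum(ts) / n_baseline
    T_mean = sum(Ts) / n_baseline
    # Ordinary least-squares slope and intercept of the baseline segment.
    slope = (sum((t - t_mean) * (T - T_mean) for t, T in zip(ts, Ts))
             / sum((t - t_mean) ** 2 for t in ts))
    intercept = T_mean - slope * t_mean
    return [i for i, (t, T) in enumerate(zip(times, temps))
            if abs(T - (slope * t + intercept)) > tol]


# Hypothetical data: heating at 10 degrees per minute from 20 degrees,
# with the sample lagging by 8 degrees between minutes 10 and 14
# (a stand-in for a heat-absorbing transformation).
times = [float(t) for t in range(21)]
temps = [20.0 + 10.0 * t - (8.0 if 10 <= t <= 14 else 0.0) for t in times]

print(find_deviation(times, temps))
```

On a real heating curve the deviation is usually small compared with the overall temperature rise, which is one reason the differential recording described next is preferred.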

Differential heating curves.

This type of analysis is carried out to determine accurately the thermal effects that occur in samples during heating or cooling (most often heating). It uses a differential thermocouple, which consists of two identical thermocouples connected in opposition. A thermocouple consists of two dissimilar conductors joined to each other at one end. The conductors are made of various alloys based on platinum, rhodium, chromium, nickel and copper. To make a thermocouple, two wires are welded together at a point contact called the junction.

A millivoltmeter is connected to the free ends. If there is a temperature difference between the junction and the free ends, an EMF arises in the thermocouple that is proportional to that temperature difference. Knowing the EMF and the temperature of the free ends, one can determine the temperature of the junction, which is placed in the object being studied. In practice, standard thermocouples made of standard alloys are used, for which calibration tables exist. To record DTA curves, the following arrangement is used. The junction of the first thermocouple is placed in a crucible with the sample, and the junction of the other thermocouple is placed in a crucible with an inert substance (the standard). Both crucibles are placed in an electric furnace and heated in the same mode.

Platinum / platinum-rhodium thermocouples are most often used, in which one wire is made of platinum and the other of a platinum-rhodium alloy. The millivoltmeter mV1 records the temperature curve, i.e. the temperature of the sample during heating. The millivoltmeter mV2 records the DTA curve, which shows the temperature difference between the sample and the standard. If no changes involving the release or absorption of heat occur in the sample during heating, the temperatures of the sample and the standard are the same, so the EMFs of the two thermocouples are equal and cancel each other, and mV2 reads 0.

If any process involving the absorption or release of heat occurs in the sample, its temperature becomes either higher or lower than that of the standard, and the EMFs of the two thermocouples differ and no longer cancel. In this case mV2 shows an EMF proportional to the thermal effect, and the polarity of this EMF shows the direction of the effect, i.e. whether it is exothermic or endothermic.
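The behavior of the mV2 reading can be sketched in a few lines of Python. This is a simplified model under stated assumptions: the thermocouple sensitivity is treated as a constant (real thermocouples are nonlinear and are read from calibration tables), the value of 10 µV/K is illustrative only, and the sign convention (sample minus standard, positive meaning exothermic) is chosen for the example. The names `differential_emf` and `classify` are hypothetical.

```python
SEEBECK_UV_PER_K = 10.0  # assumed constant sensitivity in microvolts per kelvin (illustrative)


def differential_emf(t_sample, t_standard, seebeck=SEEBECK_UV_PER_K):
    """EMF of two opposed identical thermocouples, in microvolts.

    The two individual EMFs subtract, so only the temperature
    difference between sample and standard remains.
    """
    return seebeck * (t_sample - t_standard)


def classify(emf_uv, noise_uv=1.0):
    """Interpret the polarity of the differential EMF (assumed convention)."""
    if emf_uv > noise_uv:
        return "exo"    # sample hotter than the standard
    if emf_uv < -noise_uv:
        return "endo"   # sample cooler than the standard
    return "none"       # EMFs cancel: no thermal effect


for t_sample in (500.0, 503.0, 497.0):
    emf = differential_emf(t_sample, 500.0)
    print(t_sample, emf, classify(emf))
```

The noise threshold is there only so that small fluctuations around zero are not reported as effects; its value would have to be chosen for the actual recorder.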

A DTA curve is a set of peaks directed up or down relative to the zero line (baseline).

Recording DTA curves makes it possible to determine clearly the beginning, maximum and end of a thermal effect. To determine the temperatures at which the thermal effects take place, the temperature curve T is recorded simultaneously with the DTA curve; projecting onto it gives the temperature at any point.
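The reading-off of temperatures from the simultaneously recorded T curve can be illustrated with a small Python sketch. It assumes that the two curves were sampled at the same instants, so that the same index refers to the same moment in both, and it takes the beginning and end of an effect to be the first and last samples whose deviation from the zero line exceeds a threshold. That is a simplification, and the function name `peak_temperatures` and the data are invented for the example.

```python
def peak_temperatures(temps, delta, threshold=0.5):
    """Return (T_start, T_max, T_end) of the first thermal effect, or None.

    temps: sample temperatures from the T curve
    delta: DTA signal recorded at the same instants
    """
    inside = [i for i, d in enumerate(delta) if abs(d) > threshold]
    if not inside:
        return None
    start = inside[0]
    end = start
    # Extend to the last consecutive point that is still off the zero line.
    while end + 1 < len(delta) and abs(delta[end + 1]) > threshold:
        end += 1
    # The extremum of the peak is the point of largest deviation.
    peak = max(range(start, end + 1), key=lambda i: abs(delta[i]))
    return temps[start], temps[peak], temps[end]


# Hypothetical record: one downward (endothermic) peak.
temps = [480, 490, 500, 510, 520, 530, 540, 550]
delta = [0.0, 0.1, -1.0, -3.0, -4.5, -2.0, -0.2, 0.0]

print(peak_temperatures(temps, delta))
```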

Combination thermocouples

A thermocouple is a temperature measuring device that consists of two wires made of dissimilar materials with one or two contacts.

The conductors are made from various alloys based on platinum, rhodium, chromium, nickel and copper. To make a thermocouple, two wires are welded together at a point contact called the junction.

Platinum / platinum-rhodium thermocouples are used most often; in them, one wire is made of platinum and the other of a platinum-rhodium alloy.

Advantages of thermocouples

High accuracy of temperature measurement (up to ±0.01 °C)

Large temperature measuring range: −200 °C to 2500 °C

Simplicity

Cheapness

Reliability

Disadvantages

To obtain high accuracy of temperature measurement (up to ±0.01 °C), individual calibration of the thermocouple is required.

Thermogravimetric analysis (TGMA)

When heated, many materials undergo effects associated with a change in the mass of the sample, for example dehydration, dissociation and decomposition. To obtain information on the thermal behavior of materials, the change in mass over time during heating is recorded in the form of TG curves. Recording is carried out using a thermobalance. In the simplest case, the crucible holder with the sample is mounted on one arm of an analytical balance beam, which allows the mass to be measured during heating.

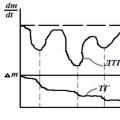

Usually the TG curve takes the form of separate plateaus divided by steps.

A plateau indicates that the mass of the sample is not changing. A step reflects a loss of mass, and its height is proportional to the loss. In some cases, thermal effects involving a change in mass can overlap each other. In this case the TG curve, which is called integral because it shows the change in mass m from the beginning to the end of heating, does not allow such processes to be separated quantitatively. For this, the change is recorded in differential form: a DTG curve, showing the rate of change of m, is recorded simultaneously. On such a curve, each effect of change in m appears as a negatively directed peak.

The area under each peak of the DTG curve is proportional to the magnitude of the change in m.

The use of differential recording makes it possible not only to accurately determine the temperature of the beginning, maximum and end of a thermal effect, but also to quantitatively separate thermal effects that overlap each other.
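The relationship between the integral TG curve and the differential DTG curve can be checked numerically. The Python sketch below estimates dm/dt from a TG record by finite differences and then integrates the resulting peak to recover the mass change. The function names `dtg` and `trapezoid_area` and the mass values are hypothetical, and a real instrument obtains the derivative electromechanically, not numerically.

```python
def dtg(times, masses):
    """Finite-difference estimate of dm/dt at each recorded point.

    Central differences inside the record, one-sided at the two ends.
    """
    n = len(times)
    rates = []
    for i in range(n):
        lo = max(i - 1, 0)
        hi = min(i + 1, n - 1)
        rates.append((masses[hi] - masses[lo]) / (times[hi] - times[lo]))
    return rates


def trapezoid_area(times, values):
    """Area between the curve and the zero line (trapezoidal rule)."""
    return sum(0.5 * (values[i] + values[i + 1]) * (times[i + 1] - times[i])
               for i in range(len(times) - 1))


# Hypothetical TG record: a plateau, one step of mass loss, another plateau.
times = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
masses = [100.0, 100.0, 100.0, 98.0, 94.0, 90.0, 88.0, 88.0, 88.0]

rates = dtg(times, masses)
print(rates)                          # a single negatively directed peak
print(trapezoid_area(times, rates))   # close to the total mass change
print(masses[-1] - masses[0])
```

For this record the peak area and the step height agree; on noisy data the agreement is only approximate and depends on how the derivative is smoothed.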

1 - crucible with sample; 2 - crucible holder; 3 - electric furnace; 4 - balance beam; 5 - core; 6 - cylindrical coil; 7 - flat coil; 8 - permanent magnet

If the sample loses mass, the balance beam shifts and sets the core in motion; the core moves inside the cylindrical coil, changing its inductance. The signal from the coil is amplified and recorded in the form of the TG curve. The flat coil and magnet act as an electromechanical differentiator. If a coil moves between the poles of a magnet, an EMF is induced in it, and the faster the coil moves, the greater the EMF. When the balance beam is displaced, the flat coil begins to move, and the EMF arising in it is amplified and recorded in the form of the DTG curve. The faster the loss of m, the greater the EMF and the more intense the peak on the curve.

Differential thermogravimetric (DTG) curve

Typically, DTG curves are more reproducible than DTA curves. DTG curves allow the temperatures of the beginning and end of a chemical reaction to be determined more accurately, and the maximum reaction rate is determined with great accuracy from the peak of the DTG curve. The maxima of the DTG curve make it easier to distinguish overlapping stages of a reaction, and the area between a peak of the DTG curve and the zero line corresponds to the change in m of the sample resulting from the individual stage of the process.

101. Amperometry is the area of voltammetric (current-voltage) measurements on electrochemical systems in which a potential is applied between a pair of electrodes. The current flowing through the electrode-liquid interface depends on the electrochemical reactions occurring at the interfaces.

On the current-voltage characteristic there is usually a region (plateau - the area of application of voltammetry) where the current is practically independent of the applied voltage. The current in this region increases as a result of the electrochemical reaction in proportion to the concentration of the reacting agent. (Drawing)
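Because the plateau current is proportional to concentration, a sensor of this kind can be calibrated with a straight line through the origin. The Python sketch below is a minimal illustration under that assumption; the function names, the units, and the calibration values are invented for the example and are not taken from a real sensor.

```python
def fit_sensitivity(concentrations, currents):
    """Least-squares slope k of the model i = k * c (line through the origin)."""
    return (sum(c * i for c, i in zip(concentrations, currents))
            / sum(c * c for c in concentrations))


def concentration_from_current(current, k):
    """Invert the calibration: c = i / k."""
    return current / k


# Hypothetical calibration points: concentration in arbitrary units,
# plateau current in microamperes.
concentrations = [1.0, 2.0, 4.0]
currents = [0.5, 1.0, 2.0]

k = fit_sensitivity(concentrations, currents)
print(k)
print(concentration_from_current(1.5, k))
```

The calibration is only meaningful if the working potential stays on the plateau, where the current does not depend on the applied voltage.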

Diagram of an amperometric O2 sensor:

Amperometric sensors are used for gas analysis (for example, O2 analysis). Ag or Pt is used as a working electrode, and Pb, Zn or Fe is used as a reference electrode.

Chemical reactions involving gaseous oxygen take place in a liquid electrolyte:

O2 + 4H+ + 4e- → 2H2O

O2 + 2H2O + 4e- → 4OH-

93, 94. Qualitative and quantitative thermal analysis. Determination of the chemical purity of substances using the DTA (differential thermal analysis) method.

DTA makes it possible to determine the nature and number of phases in layers of natural minerals, ores and salts. The components of a mechanical mixture are detected by the thermal effects characteristic of each individual substance. The effects of melting and boiling depend on the presence of impurities and therefore cannot serve to identify a substance in a mixture. If thermal effects coincide on the thermograms of mixtures, the substances can be identified by the effects of decomposition, polymorphic transformations, etc.

If the components of the system interact with each other to form chemical compounds, the character of the thermograms changes (effects inherent in the individual substances appear or disappear). This serves for the qualitative determination of individual phases and transformations in a complex system.

With the method of differential thermal analysis and appropriate equipment, it is possible to determine the temperatures at which thermal reactions occur in a substance as it is gradually and continuously heated to a high temperature, as well as the intensity and general nature of those reactions. In the case of clay minerals, differential thermal analyses show characteristic endothermic reactions caused by dehydration and destruction of the crystalline structure, and exothermic reactions caused by the formation of new phases at higher temperatures.

This method is used not only for the study of clays, but also for the study of carbonates, hydrates, sulfides, organic compounds and any substances in which, when heated, thermal reactions are observed that begin suddenly and occur in a relatively short temperature range. When studying clay minerals, it is useful not only for studying high-temperature reactions, but also for studying the dehydration process.

The results of differential thermal analysis are expressed as a continuous curve that records the thermal reactions occurring at the appropriate oven temperature. It is accepted that during endothermic reactions the curve sharply deviates downward, and during exothermic reactions it deviates upward from the horizontal zero line. The amplitude of the deviation of the differential curve from the zero line reflects the temperature difference between the sample and the oven at any given temperature and is an indicator of the intensity of the thermal reaction.

Figs. 71-76 show the differential heating curves of various clay minerals.

History of the method. Le Chatelier was the first, in 1887, to use a simple device to measure and record the thermal reactions occurring in a material when heated. He applied the data obtained to the study of clayey materials.

In 1910, this method was first used in the study of clays. In this early period, the research procedure was as follows: the substance was placed in a small platinum crucible, and the hot junction of a thermocouple was placed in the center of the substance under study. The crucible with the substance and thermocouple was placed in a furnace and heated quickly and relatively evenly. The thermocouple was connected to a galvanometer, whose readings were taken visually or photographically at short intervals. Thermal reactions in the test substance caused deviations in the galvanometer readings, which were detected by comparison with readings obtained under the same conditions but without a sample. The record showed the thermal reactions in the substance superimposed on the blank heating curve of the furnace. Examples of such records are shown in Fig. 77.

In 1899, Roberts-Austen first used the differential thermocouple method to measure the temperature difference between a test substance and a corresponding standard, and in 1908 Burgess proposed a simple and quite suitable differential thermocouple circuit. After the work of Roberts-Austen, the differential thermocouple came into wide use in metallurgy, but it was only in 1913 that Fenner first used it, in his work on stable equilibria in silicate minerals. The technique proposed by Fenner is still widely used today in differential thermal analyses of minerals; it has undergone only minor changes and improvements. Following Fenner's research, the method was used by Kracek et al. in the study of high-temperature phase changes, and even more often in the study of the mineralogical composition of clays. After the brilliant work of Orcel and of Orcel and Caillère in 1933-1935, the method of differential thermal analysis began to be used in the study of clay minerals by many researchers in different countries. Recently, it has been applied successfully to the study of such groups of minerals as carbonates, sulfates, hydrates, etc.

Description of the method. Currently, a setup is usually used in which the test sample is placed in one hole of the crucible and an inert material in the other (usually calcined aluminum oxide, α-Al2O3, which gives no reactions when heated to the maximum temperature of the experiment). One junction of a differential thermocouple (Fig. 78, a) is in the center of the test sample, and the other is in the center of the inert substance. The crucible and thermocouple are placed in a furnace, which is heated at a uniform rate. The temperature of the inert substance increases uniformly, in step with the temperature of the test sample. As soon as a thermal reaction occurs in the sample, its temperature becomes higher or lower than that of the inert material, depending on whether the reaction is exothermic or endothermic. The temperature difference persists until the reaction ends and the temperature of the sample again equals that of the furnace. Therefore, over certain intervals, the temperature of one junction of the differential thermocouple differs from that of the other, and an electromotive force (e.m.f.) appears in the differential thermocouple circuit that is a function of time or furnace temperature. The change in e.m.f. can be recorded manually using a potentiometer or galvanometer, photographically using a mirror galvanometer, or automatically using an electronic device. If no thermal reaction occurs in the sample, the temperatures of the differential thermocouple junctions are the same and no potential difference arises. The direction of the current in the circuit depends on whether the temperature of the sample is higher or lower than that of the inert substance. Therefore, the recording mechanism moves in opposite directions during endothermic and exothermic reactions.

Fig. 79 shows the dehydration curve and the differential heating curve of kaolinite, which are in full agreement. The endothermic reaction between 500 and 700° obviously corresponds to dehydration of the mineral. From a comparison of these curves it is clear that the differential method is dynamic rather than static. Thermal reactions are not instantaneous and are recorded as a function of time or as a function of the oven temperature, which continues to rise while the reaction is occurring. The temperature at which dehydration begins corresponds to the onset of the endothermic reaction. The temperature of the endothermic peak varies depending on a number of factors associated with the analysis, the nature of the reaction and the substance being studied.

Spiel, Kerr and Culp and Ahrens attempted to mathematically analyze the method of differential thermal analysis. From the description of the equipment used, as well as the factors influencing the results, it is clear that the method has limitations that do not allow for strict mathematical processing of the results obtained.

Recently, a subcommittee of the International Committee for the Study of Clays, chaired by R.S. Mackenzie (Aberdeen, Scotland) has attempted to identify the inherent limitations of this method and to establish standard techniques and apparatus. The committee's work should help improve differential thermal analysis.

Equipment used. The sample crucible used in the USA is a nickel block with drilled holes to hold about 0.5 g of sample. Experience has shown that such blocks are quite suitable and produce quite sharp and intense peaks on thermal curves. Ceramic crucibles are widely used in England. Grimshaw et al. claim that they are more convenient as they produce more intense and distinct thermal reaction peaks due to the slower temperature propagation between the test sample and the surrounding material due to the low thermal conductivity of the ceramic material. Grimshaw used a crucible of recrystallized alumina mixed with a small amount of clay, calcined at 1600°. Le Chatelier's early work used platinum crucibles to hold the sample. According to Gruver, samples should be placed in a thin-walled platinum crucible, since the high thermal conductivity of the metal allows the substance to quickly heat up to furnace temperature, and thin walls have a low heat capacity. In contrast to Grimshaw, Gruver believes that a thick-walled crucible with a higher heat capacity acts as a heat reservoir and tends to reduce the intensity and clarity of some reactions. Ahrens states that a ceramic crucible produces sharper endothermic peaks and less sharp exothermic peaks, while a nickel crucible produces comparatively smaller endothermic peaks and sharper exothermic peaks. It may be necessary to use different types of crucibles for different samples.

Fig. 78, 1 shows a diagram of the thermocouple arrangement most widely used at present, with an additional thermocouple for measuring the temperature of the furnace during its calibration. Fig. 78, 2 shows a thermocouple arrangement first used by Fenner and still used by some researchers, especially in Europe. In the latter scheme, the sample temperature is most often taken as the reference temperature.

MacKenzie and later Ahrens showed that measuring the kiln temperature directly on a clay sample had the advantage of producing peak temperature readings that were much easier to compare with each other. As will be discussed below, a number of factors can lead to a change in the peak temperature of the reaction, therefore, it is not yet possible to give preference to any method.

Perhaps the best results are achieved by calibrating against the actual furnace temperature. Early researchers used thermocouples made of platinum with 10% rhodium; thermocouples of this type are still widely used today. For many materials, chromel-alumel thermocouples have been successfully used at temperatures above 1000°. Their advantage over noble metal thermocouples is that they provide a higher potential difference and, therefore, greater sensitivity. Krachek used a gold-palladium and platinum-rhodium thermocouple, which also develops a high potential difference at high temperatures. To keep the zero line of the differential heating curve horizontal, the thermocouples must be the same size and must be centered in both the test sample and the reference substance. The thermocouple wire should have a relatively small diameter (about 0.5 mm) to reduce heat loss along the wire.

For different substances, the intensity of thermal reactions varies greatly. Therefore, it is important to have a means (for example, different resistance in the differential thermocouple circuit) that allows you to change and control the sensitivity of the installation. The furnace must be such that the desired rate of heating to the maximum temperature can be achieved, and its working area must be of such a size that a uniform heating zone is created for the sample. There is a wide variety of ovens, both horizontal and vertical, that have proven their worth. The choice of furnace type largely depends on the wishes of the analyst. To ensure uniform heating of the oven, a large number of different handicraft and automatic types of program thermostats were used. For furnaces with alloy winding, an autotransformer turned out to be quite suitable, driven by a motor through a speed retarder in such a way as to gradually and continuously increase the voltage in the furnace. Many models of automatic thermostats have been produced, all of which have also proven to be suitable.

The thermostat must be specially adapted for this oven. You need to be careful with automatic types of regulators so that their pulses are not recorded by the differential thermocouple.

Differential thermocouple readings can be obtained visually using a galvanometer and potentiometer. Continuous recording can be obtained photographically with a mirror galvanometer or using various automatic devices produced by industry. When using thermocouples made of noble metals, it is necessary to use automatic devices with correspondingly varying sensitivity, which are sufficiently stable and give reliable readings. This is important because the measured temperature differences are very small, and the potential difference obtained from noble metal thermocouples is also small. In some laboratories, a photopen with a mirror galvanometer is very successfully used as a recorder.

Grim and Rowland compiled curves for their equipment that allowed a correlation between the height of thermal effects, the amplitude of the curve and the temperature difference during the reaction (Fig. 80). The curves are based on galvanometer deflection measurements for known temperature differences. By using these curves in conjunction with differential curves, it is possible to measure the difference in temperature represented by peaks of varying heights.

The influence of various characteristics of the test substance and experimental conditions on the experimental results. Differences in equipment have been shown to influence the results of differential thermal analysis.

Differences in experimental technique, as well as the nature of the substance being tested, can cause significant variation in differential heating curves. The temperature at the junctions of a differential thermocouple depends to some extent on the thermal diffusivity of the material in which they are placed. Thermal diffusivity characterizes the rate at which a point in a hot body cools under given surface conditions and is equal to K/(d·cp), where K is the thermal conductivity, d is the density and cp is the specific heat. The conductivity of the sample may differ from that of the inert material and, moreover, may change on heating because the sample shrinks and new phases form at high temperatures. Such changes produce either a shift of the zero line or a sharp bend in the differential curve, depending on the rate of change. In the quartz curve (Fig. 76), the difference in conductivity causes a sharp rise in the base line after the transformation of α-quartz into β-quartz.
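As a quick numerical illustration of the K/dcp expression above, the sketch below evaluates the thermal diffusivity for one set of inputs. The function name and the sample values are illustrative assumptions, not data from the source; any consistent system of units will do.

```python
def thermal_diffusivity(conductivity, density, specific_heat):
    """Return K / (d * cp), the thermal diffusivity of a material."""
    if density <= 0 or specific_heat <= 0:
        raise ValueError("density and specific heat must be positive")
    return conductivity / (density * specific_heat)


# Hypothetical values in SI units: W/(m*K), kg/m^3, J/(kg*K).
alpha = thermal_diffusivity(conductivity=2.0, density=1000.0, specific_heat=500.0)
print(f"thermal diffusivity = {alpha:.1e} m^2/s")
```

Comparing the value obtained for the sample with that of the inert material gives a rough idea of how far the zero line may drift.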

The inert material should have the same specific heat, thermal conductivity and thermal diffusivity as the sample under study. It must also undergo no thermal reactions during the analysis. Calcined alumina (α-Al2O3) has proved the most suitable inert material; calcined clay has sometimes been used. However, the conductivity of calcined clay may differ from that of natural clays, so very little is gained by using it. In addition, thermal reactions sometimes occur in calcined clay owing to reversible phase changes.

Samples are usually placed in the crucible at normal pressure. The sample and the inert material must be prepared and packed in the same way. The importance of sample packing varies with the nature of the material. It is especially important for light, loose materials and less important for relatively fine-grained material with a range of grain sizes, in which compaction occurs naturally.

As far as packing the sample is concerned, it should be noted that the distribution of particle sizes in the sample is usually not important unless the material as a whole is very coarse (+60 mesh) or very fine (2 μ). It is important to know the range of particle sizes that allows appropriate compaction of the sample.

According to Spiel et al., thermal curves vary with the particle size of the substance, especially when the maximum particle size is around 2 μ. In general (Fig. 81), the size of the thermal reaction and the peak temperature decrease with decreasing particle size. For some materials, a decrease in particle size is accompanied by a decrease in the degree of crystallinity, which is reflected in the differential heating curves (the reactions become less intense and the peak temperature drops). According to Ahrens, if the particle size is slightly larger than 20 μ, the surface area of the particles is too small for the dehydration reaction to proceed quickly enough to produce noticeable effects on the differential heating curve. Reactions associated with phase transformations are mostly independent of particle size.

Ahrens investigated the influence of the shape and size of the sample hole and the position of the thermocouple placed in the hole on the nature of the differential heating curve. According to him, the size and shape of the hole have a significant effect on the peaks of reactions accompanied by weight changes (for example, those associated with dehydration), but have little effect on the peaks resulting from phase changes. Thus, a deep, narrow hole enhances the endothermic reaction during illite dehydration. In the low-temperature region, heat transfer occurs mainly through conduction; in the high-temperature region - due to radiation. When the thermocouple junction is placed deep in the sample, in the region of low temperatures, rather sharp endothermic peaks appear on the differential curves, and in the region of high temperatures, flat endothermic peaks appear. Exothermic reactions appear more clearly when the thermocouple contact is immersed deep into the sample.

According to Norton and to Spiel et al., the slower the heating, the wider the peak and the lower the corresponding temperature (Fig. 82). As the heating rate increases, the amount of time required to reach the endothermic and exothermic peak temperatures increases, the height of the peaks increases, and so does the temperature range over which the reaction occurs. According to Spiel, the area under the curve of a given reaction and the temperature at which the reaction begins do not depend on the heating rate. Ahrens found some variation in the area under the curves depending on the heating rate. Many researchers have shown that the most favorable heating rate is 10 to 15° per minute. Slower heating makes the reactions less sharp, and faster heating causes reactions to overlap, especially in samples that consist of a mixture of clay minerals. The heating rate must be kept constant, since even the slightest changes in it affect the differential curve.

In order to obtain reproducible results over a large number of samples, the oven air temperature must be stable. Samples subject to oxidation must be prepared and placed in the oven under the same oxidation conditions.

Rowland and Jonas showed how factors other than furnace temperature, such as changes in particle size, sample placement, method of crucible closure, different block coatings, and sample solubility, affect the oxidation behavior of siderite and hence the differential heating curves (Fig. 83).

Ahrens noted that increasing the partial pressure of steam in the furnace can cause a significant delay in the dewatering reaction. Rowland and Lewis noted the large influence of CO2 from the atmosphere of the heated furnace on the initial dissociation temperature when heating carbonates.

When studying clays containing carbonates, the furnace atmosphere is usually filled with an inert gas to prevent the oxidation reaction, which often results in a long-lasting and strong exothermic effect that masks other thermal reactions in the sample.

Spiel et al. constructed a series of curves for kaolinite mixed with varying amounts of inert material. They showed that the size and temperature of the peak decrease with decreasing amount of kaolinite. Thus, the peak temperature is not an absolute value, but depends, among other things, on the amount of substance present in the mixture.

Grim showed that the nature of the differential heating curves for mixtures of certain minerals depends to some extent on the interpenetration of the mixed components. The heating curves obtained for artificial mixtures consisting of particles with a diameter of several microns or larger differ significantly from the curves for mixtures consisting of finely interlayered much smaller particles found in nature. Therefore, reference curves obtained for artificial mixtures of clay minerals are often completely unsuitable for comparison with the curves of natural clays. In general, with increasing interpenetration of particles in mixtures, the intensity and clarity of thermal reactions for individual components decreases.

Careful preparation of the material for analysis and the accuracy of its implementation make it possible to obtain high-quality curves, often allowing even quantitative determinations.

From the above it is clear that the accuracy of quantitative determinations based on thermal analysis data is limited. Accuracy varies depending on the analyte, but for many substances it is still possible to achieve accuracy within 2 to 5%. Quantitative determinations are made based on the analysis of areas under the peaks that correspond to the thermal reactions of individual components. In some cases, it is very difficult to measure the space under the curves because the reaction does not start and stop suddenly and there is no clear beginning and end of the peak in the curve. Berkelheimer and Dehn proposed special methods for measuring areas under the peaks; these methods are applicable for reactions of medium intensity, and therefore also for fairly clear thermal peaks of medium size.
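To make the idea of measuring the area under a peak concrete, here is a minimal sketch that integrates a differential curve with the trapezoidal rule above a flat baseline. It is not the method of Berkelheimer and Dehn, whose details are not given here; the function name, the flat-baseline assumption and the sample readings are all illustrative.

```python
def peak_area(temperatures, delta_t, baseline=0.0):
    """Trapezoidal area between a differential curve and a flat baseline.

    temperatures: furnace temperatures of the readings (ascending)
    delta_t:      differential readings at those temperatures
    baseline:     assumed constant zero-line level (an idealization)
    """
    if len(temperatures) != len(delta_t):
        raise ValueError("both sequences must have the same length")
    area = 0.0
    for i in range(1, len(temperatures)):
        width = temperatures[i] - temperatures[i - 1]
        left = delta_t[i - 1] - baseline
        right = delta_t[i] - baseline
        area += 0.5 * (left + right) * width
    return area


# Hypothetical readings across one peak between 500 and 700 degrees.
temps = [500, 550, 600, 650, 700]
readings = [0.0, 1.0, 2.0, 1.0, 0.0]
print(peak_area(temps, readings))
```

In practice the difficulty noted above remains: the result depends on where the beginning and end of the peak are placed, which this sketch simply takes as given.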

The sensitivity of the thermal method varies for different materials depending on the intensity of their thermal reactions. Hydrates such as hydrargillite, which have sharp thermal reactions, can be detected at a content of less than 5% in the sample, while micas, due to the low intensity and lack of sharp thermal reactions, can be detected in the sample only at a content of 10 to 15%.

This is the second article in the series “Cryptography at gunpoint”.

Have you ever thought about the fact that the physical parameters of a computing device change while an algorithm is running? Moreover, these changes can be used to determine which step of the algorithm is being executed and even the data being processed, including secret keys. If not, this article is for you. It shows how, by measuring the energy consumed, you can “photograph” the execution of a cryptographic algorithm and how to obtain cipher keys from these pictures.

Instead of an introduction

A person constantly uses the effects that appear during the interaction of objects to judge the properties of the objects themselves. Using this approach, for example, the structure of the atom was discovered. At the beginning of the 20th century, it was not possible to see the atom itself, so its structure was represented in the form of a “raisin bun”, where electrons acted as raisins. This model was used as the main one until Rutherford and Geiger conducted an experiment on the scattering of alpha particles in thin plates. The experiment did not allow us to see the structure of the atom, but based on the secondary effect, scientists were able to guess that the “raisin bun” model did not work. Another obvious example is calculating the volume of a body of arbitrary shape. The simplest thing that can be done is to lower such a body into water and calculate the volume based on the new water level. Similar methods can be used to break cryptographic algorithms.

In cryptography, there is a whole class of attacks called side-channel attacks, which use the physical parameters of a computing device to determine cipher keys. The basics of the attacks were discussed in the previous article (“Cryptography at Gunpoint,” #189), where the secret key of the DES algorithm was determined by the operating time of the entire cipher. If you haven't read it, I highly recommend doing so, because it explains the mathematical component of the attack, namely Chebyshev's law of large numbers and the correlation coefficient. In this article we will not go back to the basics, but will focus more on microelectronics and statistics.

Tell me how you eat and I'll tell you... what you ate

To broaden our horizons, this time we will use the AES-128 algorithm (descriptions of it are widely available). The cipher code was taken from the Internet and run on an 8-bit STM8 Discovery microcontroller. This implementation of AES does not have the vulnerabilities discussed in the previous article, so let us assume that you do not yet know how to break it.

As we have already said, executing an algorithm changes the properties of the computing device. If you still don't believe it, look at Fig. 1 and tell me whether you can see AES. It shows a measurement of the supply voltage of the entire microcontroller, usually denoted Vdd. This voltage powers all STM8 blocks, including the CPU, memory, I/O devices and other subsystems. The measurement was made with a Picoscope 3207A digital oscilloscope with a bandwidth of 250 MHz. Here the interval between two points is 352 ns, and the graph contains only 19,886 points. Since the microcontroller runs at 16 MHz (period 62.5 ns), the voltage was averaged over roughly every five to six clock cycles; nevertheless, the rounds and even the operations within each round (Sbox substitution table, MixColumn permutation, addition with the key) can be clearly distinguished. This oscilloscope can reduce the interval down to 100 ps (although one measurement would then contain about 70 million points).
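The figures quoted above are easy to check with a few lines of arithmetic. The sketch below uses only the numbers from the text (352 ns per point, 19,886 points, a 16 MHz clock, a 100 ps minimum interval); the variable names are illustrative.

```python
# All times are in picoseconds so that the arithmetic stays in integers.
SAMPLE_INTERVAL_PS = 352_000   # 352 ns between two points on the graph
POINTS_IN_TRACE = 19_886       # points in the measurement of Fig. 1
CLOCK_PERIOD_PS = 62_500       # one cycle of the 16 MHz clock
FINEST_INTERVAL_PS = 100       # smallest interval the oscilloscope offers

trace_duration_ps = SAMPLE_INTERVAL_PS * POINTS_IN_TRACE
cycles_per_sample = SAMPLE_INTERVAL_PS / CLOCK_PERIOD_PS
points_at_finest = trace_duration_ps // FINEST_INTERVAL_PS

print(f"trace duration:    {trace_duration_ps / 1e9:.3f} ms")
print(f"cycles per sample: {cycles_per_sample:.3f}")
print(f"points at 100 ps:  {points_at_finest:,}")
```

So one encryption takes about 7 ms, each point covers between five and six clock cycles, and sampling the same window at 100 ps does give roughly 70 million points.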

Although AES is a symmetric algorithm, its basic operations occur a different number of times: 11 additions with the key, 10 substitution-table (Sbox) operations, and only 9 MixColumn operations. In Fig. 2, the 11 additions with the key are highlighted in red, the 10 substitution operations in green, and the 9 MixColumn operations in black. Copying or initialization may take place at the beginning and end of the algorithm, so these regions are highlighted in blue. In general, the measured voltage lets you determine a lot:

- The beginning and end of the cipher, which allow you to determine the operating time of the entire cipher.

- The beginning and end of the work of each round, which again allows you to determine the time of the round.

- Operations of each round: addition with key, Sbox replacement table and so on.
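The operation counts quoted above (11 key additions, 10 Sbox operations, 9 MixColumn operations) follow directly from the standard AES-128 round structure. The sketch below lists the operations in order and counts them; the function name is illustrative, and the code models only the order of operations, not the cipher itself.

```python
from collections import Counter


def aes128_operation_sequence():
    """List the AES-128 encryption steps in execution order."""
    operations = ["AddRoundKey"]            # initial key addition
    for round_number in range(1, 11):       # rounds 1..10
        operations += ["SubBytes", "ShiftRows"]
        if round_number != 10:              # the last round has no MixColumns
            operations.append("MixColumns")
        operations.append("AddRoundKey")
    return operations


counts = Counter(aes128_operation_sequence())
for name in ("AddRoundKey", "SubBytes", "MixColumns"):
    print(name, counts[name])
```

The missing MixColumns step in the final round is what makes the end of the trace look different from the nine rounds before it.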

In addition to showing the execution time of each operation of the AES algorithm, Fig. 1 should give you the idea that each individual group of instructions (and indeed each individual instruction) consumes its own amount of energy. If we learn to model the energy consumed during the execution of an instruction, and this energy depends on the value of the key and the parameters we know, then we can determine the correct value of the key. True, as always, we cannot do without a short theory, and in this case we need to figure out when and why energy is consumed.

MOS devices and their power supply

Most modern computing devices are built with CMOS (complementary metal-oxide-semiconductor) technology. The technology is remarkable in that the chip consumes virtually no energy in a static state, that is, when no calculations are performed. This is done to save your wallet and take care of the environment, and the materials for this technology (mainly silicon) are widely available. Energy in such a device is consumed only at the moment of a transition, that is, when a 1 is replaced by a 0 or a 0 by a 1. For example, if two stable signals are applied to the inputs of an AND gate, the gate does not consume energy (well, only a little). If at least one input value changes, the transistors switch, which requires energy. Once again: if stable, unchanging signals were applied to the inputs of the AND gate for a minute, it consumed no energy, but if at least one of the input signals changed during that minute, then at the moment of the change energy was spent on “recalculating” the output value. Thus, logic gates are one of the energy consumers.

In the microcircuit, in addition to logical elements, there are also registers that store intermediate calculation values. Unlike logic gates, registers require a clock signal to operate, which will synchronize operations on the chip. The clock signal is usually a square wave of a fixed frequency, for example, the STM8 Discovery uses 16 MHz, and modern processors from Intel and AMD are capable of operating above 3.5 GHz. Register switching occurs as follows: a signal from the logic elements is supplied to the first input of the register; this signal must be received in advance and should no longer be updated at a given clock cycle. A clock signal is supplied to the second input of the register; at the moment when the clock signal switches from low to high value, the register is rewritten and, accordingly, energy consumption occurs. Therefore, the second and main source of energy consumption is memory registers.
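A toy way to picture "energy is spent only on transitions" is to count which bits of a register actually flip when it is rewritten. The sketch below separates 0-to-1 from 1-to-0 flips; the function name and the 8-bit width are assumptions made for illustration, and real consumption also depends on the logic driven by the register.

```python
def count_transitions(old_value, new_value, width=8):
    """Return (rising, falling): bits going 0->1 and 1->0 on a register update."""
    mask = (1 << width) - 1
    old_value &= mask
    new_value &= mask
    rising = bin(~old_value & new_value & mask).count("1")   # was 0, becomes 1
    falling = bin(old_value & ~new_value & mask).count("1")  # was 1, becomes 0
    return rising, falling


print(count_transitions(0b1010, 0b0110))  # one bit rises, one bit falls
print(count_transitions(0x5A, 0x5A))      # nothing changes, nothing is spent
```

Bits that keep their value contribute nothing in this model, which is exactly the static-state property of CMOS described above.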

MOS devices and their behavior

Fig. 3 shows a schematic model that fits any instruction or any hardware design. There are general purpose registers R1 and R2, which store intermediate calculation values. There is a “cloud” of logic gates that performs the operations themselves (addition, multiplication, shifts, and so on). The logic cloud, like the general purpose registers, is controlled by special purpose registers. They determine which operation is performed and at what moment.

Suppose we want to add the values of registers R1 (plaintext) and R2 (key) and write the result to register R1. The special purpose registers are already loaded and have activated the required parts of the microcontroller. In the first cycle, the values of R1 and R2 are sent to the cloud, where they are added by the logic gates. Since a new operation is being performed, the state of the logic gates is updated as the signals from R1 and R2 propagate, and this consumes energy. Then, when all the gates have settled and the result of the addition has reached the R1 input, the system freezes and no power is consumed until a clock signal arrives at register R1. At that moment the register is updated, and the new value is immediately sent to the logic cloud, causing a new spike in energy consumption. If a different instruction is executed, you may see a different spike shape (look at the patterns in Fig. 2, highlighted in different colors), since other logic gates are involved.

The moment at which the general purpose registers are updated is very important. First, this is when the greatest energy consumption occurs, since the updated register value causes further switching of logic gates. Second, because the oscillator frequency is stable, every operation is executed at the same point in time on every run, so the measured voltage curves are synchronized. In other words, for two different executions of the same code, the system at time t is in the same state, that is, the signal is processed by the same logic gates. This may be hard to grasp now, but you will see later why it is important.

In this explanation, it is important for you to remember that the greatest energy consumption occurs at the moment of register switching and all voltage curves are synchronized in time.

Now we'll see how to use this knowledge to calculate the key. We will analyze only one, the very first method of attack, and we will consider some important improvements to this method in the next article.

Differential power analysis. Theory

The first attack based on power consumption was published by Paul Kocher and his colleagues in the late 1990s (Kocher's 1996 paper dealt with timing attacks), although, strictly speaking, he cannot be called the author of this method: at that time, attack techniques were actively discussed on Fidonet. According to unofficial data, as early as the late 1980s our intelligence services profiled the execution of each individual microcontroller instruction, that is, they could tell which instruction corresponds to a given voltage curve (the first foreign published works on this topic appeared only in the early 2000s, see Template Attacks). I repeat, though, that this information is unofficial.

Differential power analysis is based on the fact that the energy of switching from 0 to 1 differs from the energy of switching from 1 to 0. This is a very mild assumption, and I can safely say that it holds for 100% of semiconductor devices, that is, for all the gadgets you use every day. At least there is rigorous evidence that this is indeed the case for CMOS technology (here is a book that explains this property of CMOS systems before the advent of power analysis).

Differential power analysis takes place in several stages. First, the target register is chosen, that is, the instruction whose result you will attack. Read that again carefully: you will not be attacking the instruction itself but its result, that is, the value written to the register. The target register can be used multiple times, and as you will see, this affects the attack. The result of the instruction must depend on data you know (plaintexts or ciphertexts) and on the unknown key value. For AES-128, it is common to use operations associated with a single Sbox substitution table, since in this case the key can be searched byte by byte; in addition, Sbox is a non-linear operation, which lets you quickly discard incorrect key values. During each encryption the voltage waveform is measured; then, from the known data and a guess for the unknown key, the value of the target register is calculated (how this is done is explained below). One bit of this value is selected (for example, the first one), and all voltage waveforms are divided into two groups. The first group (group 1) contains the curves for which this bit is 1, and the second group (group 0) contains the curves for which this bit is 0. Then the arithmetic mean of each group is calculated and their difference is taken, which is why the analysis is called differential. If the model and the key guess were correct, a significant spike appears in the difference of the arithmetic means at the moment when the modeled register value was used. Now let's look at everything in more detail.
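The grouping and averaging step described above fits in a few lines. This is a minimal sketch under simple assumptions: each trace is a list of voltage samples of equal length, and `bits` holds the modeled target bit for each trace. The function names and the tiny hand-made data set are illustrative, not measurements from the article.

```python
def mean_trace(traces):
    """Point-by-point arithmetic mean of equally long traces."""
    return [sum(column) / len(traces) for column in zip(*traces)]


def difference_of_means(traces, bits):
    """Mean of group 1 minus mean of group 0, sample by sample."""
    group1 = [trace for trace, bit in zip(traces, bits) if bit == 1]
    group0 = [trace for trace, bit in zip(traces, bits) if bit == 0]
    if not group1 or not group0:
        raise ValueError("both groups must contain at least one trace")
    return [a - b for a, b in zip(mean_trace(group1), mean_trace(group0))]


# Four made-up traces of three samples; only the middle sample depends on the bit.
traces = [[1.0, 5.0, 2.0], [1.0, 3.0, 2.0], [1.0, 5.0, 2.0], [1.0, 3.0, 2.0]]
bits = [1, 0, 1, 0]
print(difference_of_means(traces, bits))
```

In this made-up data the difference is zero everywhere except at the sample that depends on the bit, which is the spike the attack looks for.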

Differential power analysis. All about AES

If the ciphertexts are available to us, we can model the Sbox operation of the last round. We know that the first byte of the ciphertext was calculated as C(1) = Sbox[S9(1)] xor K10(1), where S9(1) is the first byte of the result of nine rounds and K10(1) is the first byte of the last round key. According to the AES algorithm, the value of S9(1) must be obtained in order to calculate the final ciphertext; the calculation of S9(1) cannot be skipped, simply because the algorithm is specified that way. We are working with an 8-bit microcontroller and an unprotected implementation of AES, so it is likely that the value of S9(1) was first produced and stored in a register (the value has to be computed, and all results are first written to general purpose registers) and then placed on the stack for use in the next round. Thus we have settled on a target instruction that depends on both the key and the ciphertext, and it is a non-linear operation, which helps in side-channel attacks.

Let's select the first bit of the value S9(1) = InvSbox[C(1) xor K10(1)] with which we will classify the voltage curves. The remaining bits can be used to improve/speed up the key calculation, but for now we will only work with the first bit.
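The expression S9(1) = InvSbox[C(1) xor K10(1)] can be turned into a small helper that returns the modeled bit for a ciphertext byte and a key guess. To avoid copying a 256-entry table by hand, the sketch builds the AES S-box from its definition (inversion in GF(2^8) followed by the affine map) and then inverts it. The function names are illustrative, and treating "the first bit" as the least significant bit is an assumption.

```python
def gf_mul(a, b):
    """Multiply two bytes in GF(2^8) modulo x^8 + x^4 + x^3 + x + 1."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11B
        b >>= 1
    return result


def build_sbox():
    """Build the AES S-box: field inverse, then the affine transformation."""
    sbox = []
    for x in range(256):
        inverse = 0
        if x:
            inverse = 1
            for _ in range(254):          # x^254 is the inverse of x
                inverse = gf_mul(inverse, x)
        y = inverse
        for shift in range(1, 5):         # XOR with four left rotations
            y ^= ((inverse << shift) | (inverse >> (8 - shift))) & 0xFF
        sbox.append(y ^ 0x63)
    return sbox


SBOX = build_sbox()
INV_SBOX = [0] * 256
for value, substituted in enumerate(SBOX):
    INV_SBOX[substituted] = value


def predicted_bit(ciphertext_byte, key_guess, bit=0):
    """Modeled bit of S9 = InvSbox[C xor K10]; bit 0 is assumed to be the LSB."""
    return (INV_SBOX[ciphertext_byte ^ key_guess] >> bit) & 1


print(hex(SBOX[0x53]), predicted_bit(0x7C, 0x00))
```

Calling `predicted_bit` for every ciphertext and every key guess from 0x00 to 0xFF gives the grouping bits used to split the voltage curves.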

Remember, we said that the energy of switching from 1 to 0 and from 0 to 1 is different. We can model the result that should be written to the register, but we do not know the previous value of the register, so we cannot say for sure whether a switch took place. In fact, we do not need to. We simply assume that the previous register value did not depend linearly on the new value. I'll try to explain with an example. We have N ciphertexts. Since AES mixes and rearranges everything, in about half of these N ciphertexts our target bit will be 1, and in the other half it will be 0. Now assume that the register previously held an intermediate “random” result of the cipher (the result of another Sbox, for example). Among the cases where our modeled bit is 1, the previous register bit was 0 about half the time, so in about N/4 encryptions a switch from 0 to 1 occurs, and in another N/4 no switch occurs (1 overwrites 1). The same holds for zero: on average, N/4 encryptions switch from 1 to 0, and the rest do not switch (0 overwrites 0). So among N encryptions there will be about N/4 switches from 0 to 1 and approximately the same number of switches from 1 to 0.

If the previous value of the register was constant, for example, a loop counter was written in it, then it is always equal to either 1 or 0. In this case it is even simpler, since one of the two groups created by the simulated bit will always switch, and the other will never.
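The counting argument for both cases, a "random" previous value and a constant one, can be written as a two-line expectation. The sketch assumes that the modeled bit is 1 in half of the encryptions and is independent of the previous bit; the function name and the parameter `p_previous_one` (the probability that the previous bit was 1) are illustrative.

```python
def expected_switches(n_encryptions, p_previous_one):
    """Expected numbers of 0->1 and 1->0 switches of the target bit.

    Assumes the new bit is 1 with probability 1/2 and is independent
    of the previous register bit.
    """
    rising = n_encryptions * 0.5 * (1.0 - p_previous_one)   # previous 0, new 1
    falling = n_encryptions * 0.5 * p_previous_one          # previous 1, new 0
    return rising, falling


print(expected_switches(10_000, 0.5))  # random previous value: N/4 and N/4
print(expected_switches(10_000, 0.0))  # previous bit always 0: only group 1 switches
```

With a constant previous bit, one group switches in every encryption and the other never does, which is the "even simpler" case described above.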

If the previous value of the register depended linearly on the new value, a situation could arise in which group 1 contained only a very limited number of switches, slightly fewer than the number of switches in group 0. In that situation the numbers of switched and unswitched bits would not be balanced, and the difference between the arithmetic means would be useless. It is precisely to avoid linearity that the result of Sbox is used.

According to Chebyshev's law of large numbers, the arithmetic mean of group 1 at the moment the target instruction is executed gives you a constant plus the energy of switching from 0 to 1, and the arithmetic mean of group 0 at the same moment gives you the same constant plus the energy of switching from 1 to 0. Since we know that the switching energies from 0 to 1 and from 1 to 0 are different, the difference of the arithmetic means shows a spike at the moment the instruction is executed.

Let's look at why all other points of the difference of arithmetic means tend to zero. This is Chebyshev's law again: since we sorted the curves by our target register, all other instructions will most likely fall into both groups at random, so the arithmetic means of the two groups converge to the same value for all other instructions. Thus the difference of the arithmetic means converges to zero at all points except for instructions that in one way or another depend on the selected bit of the target register. Sometimes, however, you can encounter “ghost” spikes. They occur when a bit in the target register affects further calculations, but ghost spikes can be put to good use if you understand where they come from.
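The whole argument can be checked on a toy, noise-free simulation. In the sketch below the "device" leaks the least significant bit of InvSbox[C xor K10] at one sample of a four-sample trace, another sample depends only on the ciphertext, and the attack computes the difference of means for all 256 guesses of the key byte. Everything here is an assumption made for illustration: the key value 0x3C, the trace layout, the leakage model and the function names. The S-box is rebuilt from field arithmetic only so that the block runs on its own.

```python
def gf_mul(a, b):
    """Multiply two bytes in GF(2^8) modulo x^8 + x^4 + x^3 + x + 1."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11B
        b >>= 1
    return result


def build_inv_sbox():
    """Build the inverse AES S-box from the definition of the S-box."""
    inv_sbox = [0] * 256
    for x in range(256):
        inverse = 0
        if x:
            inverse = 1
            for _ in range(254):
                inverse = gf_mul(inverse, x)
        y = inverse
        for shift in range(1, 5):
            y ^= ((inverse << shift) | (inverse >> (8 - shift))) & 0xFF
        inv_sbox[y ^ 0x63] = x
    return inv_sbox


INV_SBOX = build_inv_sbox()
TRUE_KEY = 0x3C      # hypothetical last-round key byte
LEAK_POINT = 2       # hypothetical sample index where the target bit leaks


def simulate_trace(ciphertext_byte):
    """Idealized trace: constant, key-independent activity, leak, constant."""
    target_bit = INV_SBOX[ciphertext_byte ^ TRUE_KEY] & 1
    return [0.3, 0.5 * ((ciphertext_byte >> 7) & 1), float(target_bit), 0.3]


CIPHERTEXTS = list(range(256))
TRACES = [simulate_trace(c) for c in CIPHERTEXTS]


def mean_trace(traces):
    return [sum(column) / len(traces) for column in zip(*traces)]


def difference_trace(key_guess):
    """Difference of means for one key guess, grouped by the modeled bit."""
    group1, group0 = [], []
    for c, trace in zip(CIPHERTEXTS, TRACES):
        (group1 if INV_SBOX[c ^ key_guess] & 1 else group0).append(trace)
    return [a - b for a, b in zip(mean_trace(group1), mean_trace(group0))]


def peak(diff):
    """Index and height of the largest absolute value in a difference trace."""
    index = max(range(len(diff)), key=lambda i: abs(diff[i]))
    return index, abs(diff[index])


scores = {guess: peak(difference_trace(guess)) for guess in range(256)}
recovered_key = max(scores, key=lambda guess: scores[guess][1])
peak_index = scores[recovered_key][0]
print(f"recovered key byte: {recovered_key:#04x}, peak at sample {peak_index}")
```

Real traces are noisy and far longer, so many more encryptions are needed before the correct guess stands out, but the ranking logic stays the same.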

Differential power analysis. Practice

Let us finally move from theory to practice. Using the same oscilloscope, the voltage was measured for 10 thousand encryptions. To remove noise, each encryption was performed 1000 times and the voltage was averaged. The sampling was doubled, so that each voltage trace contains 40,500 points. We will attack the operation that uses the register value S9(1) = InvSbox[C(1) xor K10(1)]. As you will see later, there are several such operations. For the attack, we take the first byte of each ciphertext and calculate the register value for all encryptions and all possible values of the key byte (see table).
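The hypothesis table can be sketched as follows. This is an illustrative sketch rather than the article's tooling: the inverse S-box is derived from the AES field arithmetic instead of being pasted in as a 256-entry table, the helper names (`gf_mul`, `build_sboxes`, `target_bit`, `hypothesis_table`) are hypothetical, and the two ciphertext bytes in the usage lines are made up. Bit positions follow the article's numbering, where bit 1 is the most significant and bit 8 the least significant.

```python
def gf_mul(a, b):
    """Multiply two bytes in GF(2^8) modulo the AES polynomial 0x11B."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11B
        b >>= 1
    return result

def rotl8(x, n):
    """Rotate a byte left by n bits."""
    return ((x << n) | (x >> (8 - n))) & 0xFF

def build_sboxes():
    """Build the AES S-box (field inverse + affine map) and its inverse."""
    sbox = [0] * 256
    for x in range(256):
        # Brute-force multiplicative inverse; 0 maps to 0 by convention.
        inv = next((y for y in range(1, 256) if gf_mul(x, y) == 1), 0)
        sbox[x] = (inv ^ rotl8(inv, 1) ^ rotl8(inv, 2)
                   ^ rotl8(inv, 3) ^ rotl8(inv, 4) ^ 0x63)
    inv_sbox = [0] * 256
    for x, y in enumerate(sbox):
        inv_sbox[y] = x
    return sbox, inv_sbox

SBOX, INV_SBOX = build_sboxes()

def target_bit(cipher_byte, key_guess, position):
    """Selected bit of S9 = InvSbox[C xor K10]; position 1 = MSB, 8 = LSB."""
    state = INV_SBOX[cipher_byte ^ key_guess]
    return (state >> (8 - position)) & 1

def hypothesis_table(cipher_bytes, position):
    """One row per encryption, one column per key guess 0x00..0xFF."""
    return [[target_bit(c, k, position) for k in range(256)]
            for c in cipher_bytes]

# Made-up ciphertext bytes, used only to show the shape of the table.
table = hypothesis_table([0xB3, 0xAC], position=8)
print(len(table), "rows,", len(table[0]), "key guesses per row")
```

Each column of such a table is then used to split the measured traces into group 1 and group 0 for one key guess.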

Based on the values from column 4 of the table (the first bit of S9(1) for key 0x00), we put into group 1 all encryption voltage curves for which the target bit of S9(1) is equal to 1, and into group 0 all encryption voltage curves for which this bit is equal to 0. Now let's construct the difference between the arithmetic means of the two groups. Let's perform exactly the same operation for the remaining 255 keys and plot the results, as shown in Fig. 4. As you can see from this figure, one key has a significant spike near the end of the encryption; a larger view of it is shown in Fig. 5.

In it we see three peaks (numbered from 1 to 3). I would explain the third peak by the fact that the value of S9(1) is read from the stack to calculate Sbox, since it lies in the Sbox execution zone of the last round (from 6200 to 6420 is the Sbox and Shift Rows zone). The two previous peaks are a little more difficult to explain. The second peak is associated with the key addition operation in which the value of S9(1) was directly obtained, and the very first peak is associated with the MixColumn operation (since it lies in the MixColumn zone).

It is important to understand here that addition with a key is a linear operation: if the key bit is 1, then before addition with the key the bits had exactly the opposite values to those in the table, and if the key bit is 0, the bits before addition with the key were exactly the same. Before addition with the key, the byte value must be obtained from the MixColumn operation, and it is this moment, when the byte that is about to be added to the key is produced, that we see on the graph. Since the peak points in the opposite (negative) direction, groups 1 and 0 have most likely swapped places (we subtract the larger from the smaller): group 1 contained all encryptions for which the bit was 0, and group 0 all encryptions for which the bit was 1. This is possible if the key bit is 1, since in that case our model from the table is strictly inverted, which makes the peak negative.
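The sign argument can be illustrated with a small noise-free model. This is only a sketch under assumptions: the key byte `EXAMPLE_KEY_BYTE` is a hypothetical value chosen so that its first bit is 1 and its eighth bit is 0, and the two energies are arbitrary. The traces are sorted by the bit after key addition, while the "device" leaks the bit before key addition.

```python
def bit(value, position):
    """Bit of a byte using the article's numbering: 1 = MSB, 8 = LSB."""
    return (value >> (8 - position)) & 1

def mean(values):
    return sum(values) / len(values)

def peak_before_key_addition(key_byte, position):
    """Difference of means at an instruction that handles the byte
    BEFORE key addition, when groups are built from the bit AFTER it."""
    groups = {0: [], 1: []}
    for before in range(256):
        after = before ^ key_byte                        # key addition is XOR
        energy = 1.0 if bit(before, position) else 0.4   # assumed energies
        groups[bit(after, position)].append(energy)
    return mean(groups[1]) - mean(groups[0])

EXAMPLE_KEY_BYTE = 0x80  # hypothetical: first bit 1, eighth bit 0

print("first bit :", round(peak_before_key_addition(EXAMPLE_KEY_BYTE, 1), 3))
print("eighth bit:", round(peak_before_key_addition(EXAMPLE_KEY_BYTE, 8), 3))
```

With a key bit of 1 the two groups are swapped and the difference comes out negative; with a key bit of 0 the sign stays positive. This is the same pattern used above to explain the direction of the first peak.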

To find the key, we usually plot the maximum value of the difference of means for each key candidate, as shown in Fig. 6. It can be seen that the maximum for the key value 208 = 0xD0 is the largest, so this key is most likely correct.
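The whole procedure, from sorting traces to picking the key with the largest peak, can be sketched end to end on simulated data. Everything about the simulation is an assumption made for illustration (trace length, noise level, leaking sample, energies, number of traces); only the target function InvSbox[C xor K] and the choice of the first bit come from the text. The helper names are hypothetical.

```python
import random

def gf_mul(a, b):
    """Multiply two bytes in GF(2^8) modulo the AES polynomial 0x11B."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11B
        b >>= 1
    return result

def build_inv_sbox():
    """Derive the AES inverse S-box from the field inverse and affine map."""
    rot = lambda x, n: ((x << n) | (x >> (8 - n))) & 0xFF
    inv_sbox = [0] * 256
    for x in range(256):
        inv = next((y for y in range(1, 256) if gf_mul(x, y) == 1), 0)
        s = inv ^ rot(inv, 1) ^ rot(inv, 2) ^ rot(inv, 3) ^ rot(inv, 4) ^ 0x63
        inv_sbox[s] = x
    return inv_sbox

INV_SBOX = build_inv_sbox()

def first_bit(cipher_byte, key_byte):
    """Most significant bit of InvSbox[C xor K]."""
    return (INV_SBOX[cipher_byte ^ key_byte] >> 7) & 1

def simulate(true_key, n_traces=2000, n_samples=6, leak_at=3, seed=7):
    """Simulated device: noise everywhere, bit-dependent energy at one sample."""
    rng = random.Random(seed)
    cipher_bytes, traces = [], []
    for _ in range(n_traces):
        c = rng.randrange(256)
        trace = [rng.gauss(0.0, 0.5) for _ in range(n_samples)]
        trace[leak_at] += 1.0 if first_bit(c, true_key) else 0.4
        cipher_bytes.append(c)
        traces.append(trace)
    return cipher_bytes, traces

def peak_for_key(cipher_bytes, traces, key_guess):
    """Largest absolute difference of means over all samples for one guess."""
    n_samples = len(traces[0])
    sums = [[0.0] * n_samples, [0.0] * n_samples]
    counts = [0, 0]
    for c, trace in zip(cipher_bytes, traces):
        b = first_bit(c, key_guess)
        counts[b] += 1
        for i, value in enumerate(trace):
            sums[b][i] += value
    return max(abs(sums[1][i] / counts[1] - sums[0][i] / counts[0])
               for i in range(n_samples))

cipher_bytes, traces = simulate(true_key=0xD0)
peaks = [peak_for_key(cipher_bytes, traces, k) for k in range(256)]
best_key = max(range(256), key=lambda k: peaks[k])
print("best key guess:", hex(best_key))
```

Wrong guesses sort the traces almost independently of the real bit, so their peaks stay small, while the correct guess reproduces the full energy difference. Real traces are far longer and noisier, so the margin between the right key and the rest is usually much less comfortable than in this toy setup.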

For the sake of comparison, we will plot the same graphs but select the eighth bit of the S9(1) value (the least significant bit) as the target bit. According to previous calculations, the corresponding key bit should be equal to 0, so in Fig. 8 we should see the first peak in the positive zone and not in the negative one, as was the case for the first bit. We should also get the same key, because the key did not change; only the bit used for the attack changed. All peaks should be at the same moments in time, because the operation itself did not move. Figs. 7–8 turned out as our hypotheses predicted, and the maximum value of the difference of means was obtained for the same key value with different target bits, so we have most likely found the correct key byte (the microcontroller used a key taken from the AES standard, so you can check all its bytes).

In a similar way, you can recover all the remaining bytes of the last round key. Many works explain how to speed up/simplify/improve the attack algorithm, but the main thing for you now is to understand the basis of this process. We'll look at some improvements in the next article.

What to look at next?

I'm sure you still have many questions about the attack itself. I suggest you look for answers on the Internet. To do this, you can use scholar.google.com and the keywords: differential power analysis, power analysis attacks. There is a special website dpacontest.org that holds competitions on the speed and accuracy of using side-channel attacks. This site has code examples and a lot of attack data. Well, keep an eye on various events in Russia, where workshops on these attacks are given. I also advise you to take a look at the materials of conferences such as COSADE, CHES and CARDIS.

Conclusion

Nothing happens without a trace, including the execution of cryptographic algorithms. While a cipher is running, information leaks through side channels such as power consumption. Performing a calculation requires energy, so it is impossible to protect completely against side-channel attacks; this problem is fundamental. The article shows how the attack actually works and how to find the encryption key, using the example of AES-128 executed on the STM8 microcontroller. To find the key, only a minimum of information about the power consumption model was used, but it was enough to break the algorithm. The article demonstrates one of the first attacks created in 1996, and since then, side-channel analysis has evolved significantly. Some improved attack methods will be discussed in the next article, so, as usual, stay tuned...
